Generative AI UX: Version Control Starts With the “Perfect Result”

Daria Kartseva, UX Designer, November 2025

Generative AI is not a magic button that gives you the perfect result every time.

It’s more like a lottery.

One prompt hits the target, another one fails, the next is “almost there,” and sometimes the model just breaks.

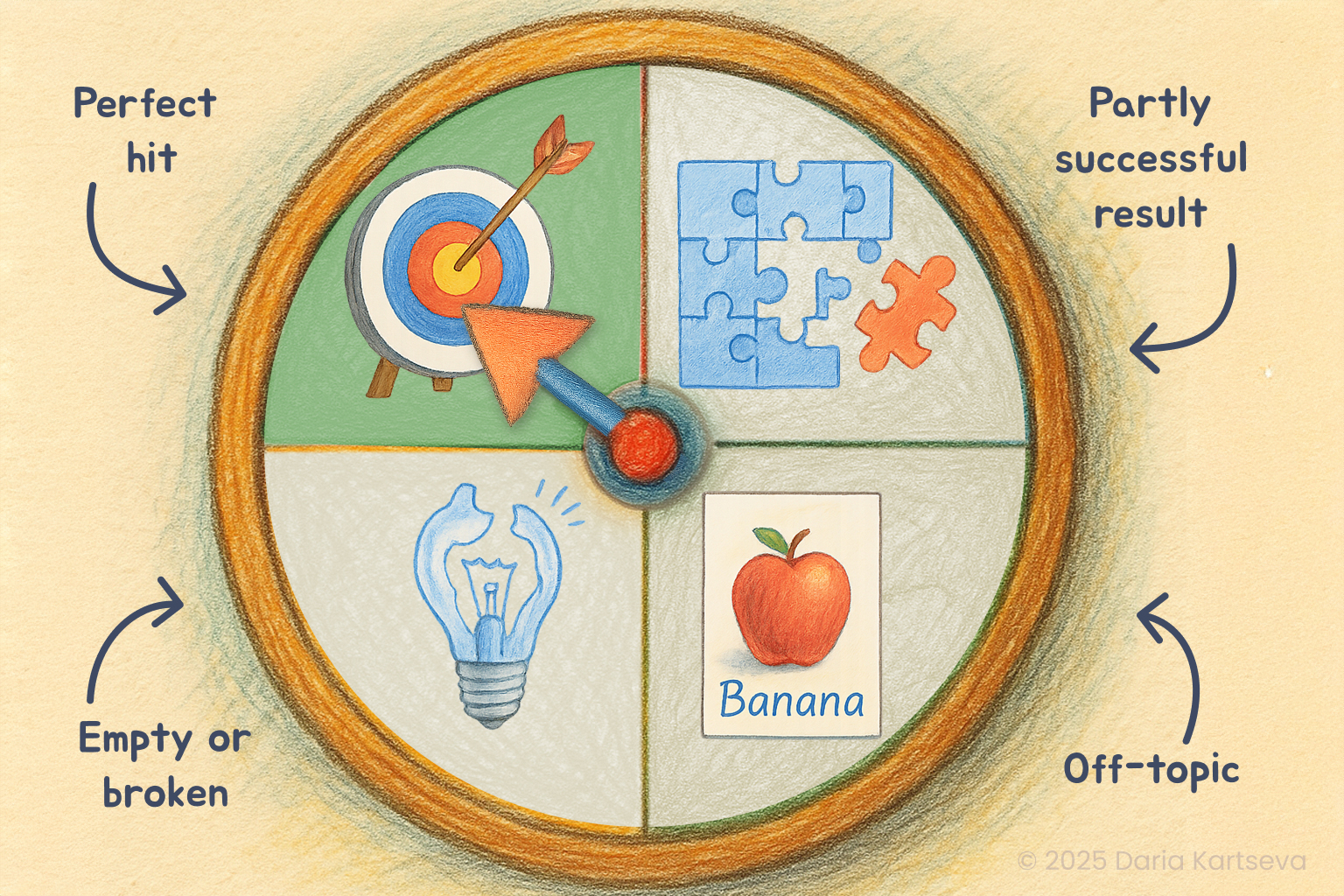

If we look at user experience, there are four common outcomes:

- Perfect hit - exactly what the user wanted.

- Partly successful result - some useful parts, but needs more work.

- Off-topic - not what was asked for.

- Empty or broken - generation failed completely.

For UX, these are not four different products - they are four states of the same process.

A user can go through all of them in one evening.

That’s why the interface should not promise “only perfect results.”

It should help people recover safely from any outcome.

Today we'll look at the first one - when the model gets it right.

It seems like the work is done.

But actually, it’s just starting.

Why “perfect” is not the end

Even when the AI gives a great image, text, song, or slide - users rarely stop.

They want to compare, check, and save versions.

They want to be sure it wasn’t just luck.

That’s why the interface must support working not with one result, but with many versions.

The basic tools for version management

Basic actions like downloading or sharing are a given. But for versions to function as part of the workflow, the interface needs additional tools:

- Likes or tags. Mark results as “good” or “bad.”

It's important that the interface makes this clear: users often assume their feedback changes future outputs, when in reality it usually works only as a personal sorting tool.

Still, these signals point toward a future where systems may use such reactions more directly - adapting not just to prompts, but to consistent user preferences.

- Notes. Let users write short comments for each version - like “good rhythm,” “nice light,” or “better tone.”

- Current favorite. A quick way to mark the best version so far.

- History and undo. The option to go back without losing context.

- Catalog and filters. Search by time or features - for example: “show versions from yesterday” or “dark backgrounds only.”

With these tools, even if the user already has a good result, they can keep exploring without fear of losing progress.
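To make the tools above more concrete, here is a minimal Python sketch of how a product might store versions behind the scenes. It is a hypothetical illustration, not any real product's API: the names `Version`, `VersionStore`, `rate`, `annotate`, `mark_favorite`, `undo` and `filter`, the “good”/“bad” rating values, and storing features as plain text tags are all assumptions. Ratings stay local and are never sent to the model, matching the point that likes are a personal sorting tool. Undo only reverts annotations; generated versions are never deleted, so going back never loses context.

```python
# Hypothetical sketch: names and fields are illustrative assumptions, not a real product API.
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Version:
    """One generated result plus the user's own annotations (hypothetical model)."""
    id: int
    prompt: str
    created_at: datetime
    features: set[str] = field(default_factory=set)  # searchable traits, e.g. "dark background"
    rating: str | None = None  # "good" / "bad": a personal sorting aid, never sent to the model
    note: str = ""  # short comment such as "nice light"


class VersionStore:
    """Keeps every result so the user can keep exploring without losing progress."""

    def __init__(self) -> None:
        self.versions: dict[int, Version] = {}
        self.favorite_id: int | None = None  # the "current favorite"
        # Undo log of (object, attribute, previous value); versions themselves are never deleted.
        self._history: list[tuple[object, str, object]] = []
        self._next_id = 1

    def add(self, prompt: str, created_at: datetime, features=()) -> Version:
        version = Version(self._next_id, prompt, created_at, set(features))
        self.versions[version.id] = version
        self._next_id += 1
        return version

    def _change(self, obj: object, attr: str, value: object) -> None:
        # Remember the old value first so undo() can restore it.
        self._history.append((obj, attr, getattr(obj, attr)))
        setattr(obj, attr, value)

    def rate(self, version_id: int, rating: str | None) -> None:
        if rating not in ("good", "bad", None):
            raise ValueError(f"unknown rating: {rating!r}")
        self._change(self.versions[version_id], "rating", rating)

    def annotate(self, version_id: int, note: str) -> None:
        self._change(self.versions[version_id], "note", note)

    def mark_favorite(self, version_id: int) -> None:
        if version_id not in self.versions:
            raise KeyError(version_id)
        self._change(self, "favorite_id", version_id)

    def undo(self) -> bool:
        """Revert the most recent annotation; returns False when there is nothing to undo."""
        if not self._history:
            return False
        obj, attr, previous = self._history.pop()
        setattr(obj, attr, previous)
        return True

    def filter(self, since=None, until=None, feature=None, rating=None) -> list[Version]:
        """Catalog search: every criterion left as None is ignored; results are oldest first."""
        matches = [
            v for v in self.versions.values()
            if (since is None or v.created_at >= since)
            and (until is None or v.created_at < until)
            and (feature is None or feature in v.features)
            and (rating is None or v.rating == rating)
        ]
        return sorted(matches, key=lambda v: v.created_at)


# Usage: two versions of the same idea, annotated and then partly undone.
now = datetime(2025, 11, 10, 12, 0)
store = VersionStore()
a = store.add("lighthouse at dusk", now - timedelta(days=1), {"dark background"})
b = store.add("lighthouse at dusk, warmer", now, {"warm light"})
store.rate(a.id, "good")
store.annotate(a.id, "nice light")
store.mark_favorite(a.id)
store.mark_favorite(b.id)
store.undo()  # the favorite goes back to the first version
print(store.favorite_id)  # 1
print([v.id for v in store.filter(feature="dark background")])  # [1]
```

Keeping annotations in an undo log, rather than overwriting them, is one simple way to support “go back without losing context”; a real product might instead snapshot the whole workspace.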

Why this matters

A perfect output does not automatically create trust.

Trust appears when the interface lets the user:

- compare different results;

- see that success is repeatable;

- return to the right version later;

- build their own workflow on top of an unpredictable system.

Good version management makes AI feel less like roulette - and more like a tool you can rely on.

What’s next

This was the easiest case - when AI gets it right.

Next comes the more complicated one: What if the result is almost good, but needs changes?

How can users tell the model what exactly to fix?

And what does “editability” even mean in a probabilistic system?

That’s what we’ll explore in Part 2.

Kartseva Daria

Phone: +358 40 170 33 53 (fi)

E-mail: kartseva.daria@gmail.com